Stop Fearing AI

When we appreciate a creative work, we often sense an intangible bond with its creator. Although challenging to articulate, this connection is integral and often profoundly meaningful to the overall experience. However, this connection feels hollow when we learn that the creator is AI. It leaves us feeling deceived, and that is likely why so many people feel uncomfortable with generative AI.

We are in the midst of the 5th Industrial Revolution. Like prior revolutions, it is reshaping how we work and live, and it’s not going away any more than the automobile, electricity, or the internet did. You can rail against it, or you can embrace it and find ways for it to enrich your life.

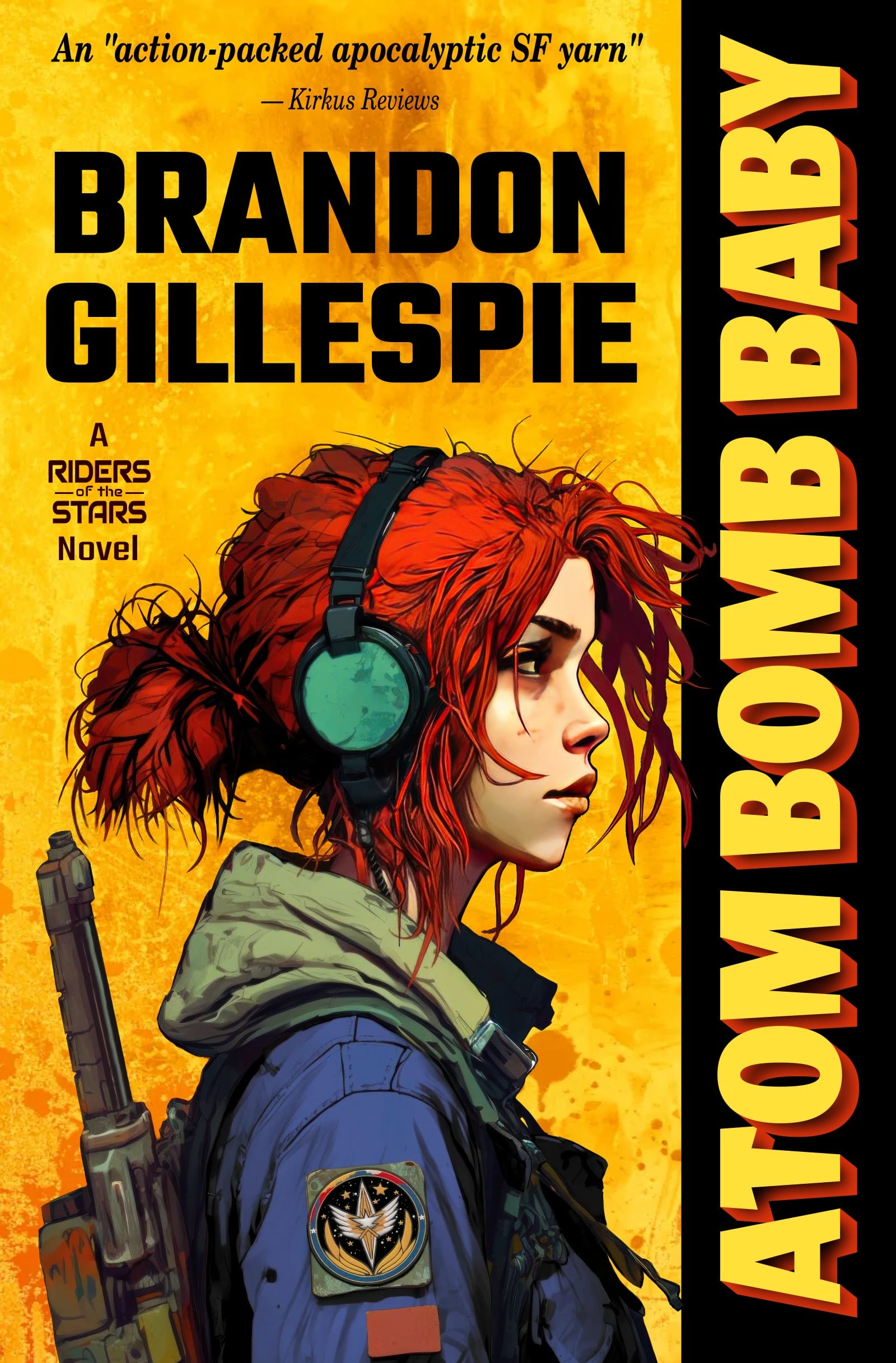

I used AI as a tool to help create the cover image for my novel, Atom Bomb Baby, and this article explores my thoughts on the subject.

AI is a Creative Assistant

Good creative people don’t need to fear AI. AI typically creates mundane, confusing, or surreal works, and it struggles to judge whether a result is good, a judgment that is second nature for a human.

Will AI revolutionize our work processes? Undoubtedly. Will AI displace specific jobs? Certainly, and at the same time, it will also create new jobs.

Many of those who express apprehension toward AI tend to overestimate its capabilities. Think of AI as a versatile tool, a sort of neurotic assistant that excels at brainstorming, tackling mundane tasks in your creative process, and much more.

AI Cannot Judge

AI, at its core, is simply a probability calculator. Layers upon layers of complexity turn those calculations and algorithms into a usable tool, such as an image generator. In the end, however, it relies on probabilities derived from human input: humans are the ones who taught it what is good or bad, because it lacks the capacity for independent thought or judgment.

AI can evaluate what it is generating and choose the next step toward a final product that, based on the data humans trained it on, might best meet the target criteria it was given.

But it cannot choose what to create. A human’s context extends far beyond that of any AI: our entire history is part of everything we create.

Furthermore, considering and judging while creating is more than the kind of calculation an AI makes. Often, the creator doesn’t even understand the desired outcome; it’s just a feeling, and the creation process leads them to it.

What is AI Training?

If you’ve ever answered a CAPTCHA asking you to “pick all squares with a traffic light,” you have participated in training AI. This collective training data feeds into an abstract mathematical model that discerns between what we consider ‘good’ and ‘bad,’ or ‘a cat’ vs. ‘a traffic light.’ That model, combined with a human prompt and a bit of randomness, is then used to calculate probabilities while generating something new.
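As a rough illustration of human labels turning into a compact model, here is a toy perceptron in Python. It is far simpler than any real image or language model, and the two features and their values are hypothetical, but it shows the principle described above: the human-labeled examples shape a few numbers, and only those numbers are kept.

```python
# Toy training loop: labeled examples in, a handful of numbers out.
# Features are hypothetical, e.g. (redness, roundness) scores for an image tile.

def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn weights that separate label 1 (traffic light) from label 0 (cat)."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x1, x2), label in examples:
            prediction = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            error = label - prediction  # 0 when correct, +1 or -1 when wrong
            # Nudge the weights toward the human-supplied label.
            w1 += lr * error * x1
            w2 += lr * error * x2
            b += lr * error
    return w1, w2, b

def predict(model, features):
    """Classify a new example using only the learned numbers."""
    w1, w2, b = model
    x1, x2 = features
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

# Human-labeled answers, like CAPTCHA clicks: 1 = traffic light, 0 = cat.
labeled = [((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.1, 0.2), 0), ((0.2, 0.1), 0)]

model = train_perceptron(labeled)
print(model)                          # three numbers: the "impression" that remains
print(predict(model, (0.85, 0.85)))   # an example the model never saw
```

Once trained, the labeled examples could be discarded; the model keeps only its weights and bias.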

Similarly, with humans, mastery isn’t attained overnight—it demands years of practice and studying a wide variety of works.

Consider a seasoned artist painting a woman sitting with a serene countryside in the background. With each brush stroke, the artist ponders: Is this stroke good? Did I make a mistake? Should I adjust the color? Does it feel right? It’s a process steeped in self-evaluation, emotion, and artistic judgment.

In contrast, AI lacks inherent intelligence, let alone emotional capabilities; it is merely executing mathematical calculations. An AI model trained on the same body of art has no capacity for emotional introspection. Instead, it operates on probabilities, iteratively refining a noisy, blurry image, guided by the mathematical patterns in the model that might best fit what the human has asked for. Similarly, large language models (such as ChatGPT) predict the next probable word based on the input and the model’s parameters.
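To make “predicts the next probable word” concrete, here is a minimal Python sketch of one common way a language model turns its raw scores into a choice: a softmax with a temperature setting, followed by a weighted random draw (the “bit of randomness”). The candidate words and their scores are made up for illustration; real models score tens of thousands of tokens, and this is not presented as how any particular product is implemented.

```python
import math
import random

def next_word_probabilities(scores, temperature=1.0):
    """Convert raw scores (logits) into probabilities with a softmax."""
    scaled = {word: s / temperature for word, s in scores.items()}
    top = max(scaled.values())
    # Subtracting the max score keeps exp() from overflowing.
    weights = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(weights.values())
    return {word: w / total for word, w in weights.items()}

def sample_next_word(scores, temperature=1.0, rng=None):
    """Draw one word at random, weighted by its probability."""
    rng = rng or random.Random()
    probs = next_word_probabilities(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

# Hypothetical scores a model might assign after "The cat sat on the".
scores = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}
print(next_word_probabilities(scores))
print(sample_next_word(scores, temperature=0.7, rng=random.Random(1)))
```

A lower temperature sharpens the distribution toward the most likely word; a higher one flattens it and makes surprising choices more frequent.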

The training phase of a model is when the notion of ‘good’ or ‘bad’ is introduced, albeit in a generalized sense: the model doesn’t recall the actual data it was trained on, only impressions of that data matched to the labels and inputs given by humans.

This last point is particularly important. Despite being trained on massive amounts of data, the resulting model is only a tiny fraction of that size. A typical image-diffusion model is trained on nearly a petabyte of images (uncompressed), yet the resulting model file is only about two gigabytes, roughly 500,000 times smaller! Imagine each gigabyte as a day: the model would amount to two days, while the training data would be a million days. Starting in 2023, it would take more than 2,700 years to count off those days, ending in the year 4762. There is simply no way the model can contain the original works in such a small space. It keeps only impressions of those works, much like humans do.
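The arithmetic behind these figures can be checked in a few lines of Python. This sketch assumes decimal units (1 PB = 1,000,000 GB) and 365-day years, and it takes the paragraph’s approximate training-set and model sizes as given rather than measuring any specific model.

```python
# Approximate figures from the paragraph (decimal units assumed).
PETABYTE_GB = 1_000_000
training_data_gb = 1 * PETABYTE_GB   # ~1 PB of uncompressed training images
model_size_gb = 2                    # ~2 GB model file

ratio = training_data_gb / model_size_gb
print(f"The model is about {ratio:,.0f}x smaller than its training data")

# The "one gigabyte = one day" analogy, counting 365-day years from 2023.
years = training_data_gb / 365
print(f"{training_data_gb:,} days is about {years:,.0f} years, "
      f"ending around {2023 + int(years)}")
```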

This ratio of raw image data to the impressions stored within the model helps demonstrate that AI isn’t simply “copying and pasting” what it read or saw, any more than a human does when creating a new work.

Embracing AI

As a writer, graphic designer, and software engineer, I was working with AI and neural networks before the AI boom. When Midjourney came around in 2022, I was at the point of needing to find an artist for my book Atom Bomb Baby. Because the book is in a genre that has no novels, just a lot of video games (such as The Outer Worlds and Fallout), there isn’t a typical look for its covers. I considered making something like a 1950s movie poster, but I needed some reference examples. To help with this art direction, I decided to take Midjourney for a spin.

Meanwhile, I kept looking for an artist. But as the weeks and months went by, I struggled to find one with the look and quality I wanted who was also available on the timeline I needed (under six months).

As Midjourney improved, I started exploring whether it could do more than guide the concept art. As an artist, I’m stronger at static work than at drawing people, yet with Midjourney I could compose a person better than I could on my own, and that’s what I did. I embarked on a meticulous process, integrating pieces from thousands of renders to craft the profile of Ashe, the main character, on the cover. I spent more time working on the cover than I did writing the book!

Without AI’s help, I would not have been able to create the cover as it is now. Still, I’d love to find an artist to help with these things (whether or not they use AI as part of their toolset). If you feel you could match this quality and are available, don’t hesitate to reach out; I’d much rather devote my time to writing.

AI-Generated Copyrighted Work

Can AI generate copyright-violating content? Absolutely.

Yet, just as you can create forgeries with any other tool, the tool’s involvement doesn’t alter the legal ramifications of the result. Violating copyright remains a violation regardless of the tool used, be it a pencil, a typewriter, or an AI renderer.

Consider this: when a forgery or copyright-violating work arises, do you blame the paintbrush or the painter wielding that brush? Similarly, the responsibility lies with the individual utilizing AI to produce unauthorized reproductions.

There are many articles about somebody making AI generate a copyrighted thing, yet all of those I’ve seen potentially misrepresent how the result was obtained. It is far easier to create a copyright-violating picture in Photoshop than to coerce a generative AI model into doing the same, so why don’t the same articles lambast Photoshop?

AI’s Influence on Artistic Integrity

Should AI replicate the essence of another artist’s work? It’s a complex question with implications reaching into the heart of artistic integrity.

As humans, when we engage with art, we internalize impressions that later influence our creative endeavors. If we draw upon these impressions in our work, are we infringing upon the original artist? Are we stealing from the original artist when we make our own works?

AI operates similarly.

The ethical conundrum lies in using AI trained on other creators’ work. Should this be acceptable? To answer this, I’d ask: is it acceptable for you to study somebody else’s work and then create something inspired by it?

This argument often prompts the objection, “The AI was illegally trained!” That is a separate part of the debate, so please keep these two crucial points separate.

If you accept that the AI model was trained ethically, there should be no difference between you studying something to create something new and a mathematical model doing the same using probabilities.

Copyright

First, I am not a lawyer. When it comes to anything herein that may be construed as legal advice, please check with an actual attorney. I’m explaining how I perceive it, which may or may not be correct.

Like it or not, copyright protects only a specific embodiment of a creative work, such as the text of an article or the actual painting made by an artist; it does not protect the essence of that work. As the name suggests, this protection is about copies and who owns the right to make them.

But when does something change enough to be legally copied without violating a copyright?

In the USA, creators automatically hold the copyright on original works they create (or their employer does, in a work-for-hire scenario). But if a work is generated by an AI at a human’s prompting, who owns it? Because we’re in a new industrial revolution, our laws have not yet caught up, so we can only reason within existing IP law.

Two key concepts in IP law matter when dealing with copyright: derivative and transformative works. Suppose you take somebody’s work and make a few changes, but the original is still clearly part of the result. In that case, your work is likely to be considered derivative, and the original copyright holder still has a claim to it, regardless of the tool you used to make it.

However, if you work on it and transform it into something different “enough” (and enough is NOT clearly defined in the law), it becomes transformative, and this new result is your work.

This matters because there are many open questions about copyright on the unchanged output of generative AI. Since Midjourney cannot own a copyright any more than Photoshop can, who owns the output?

Some aspects of copyright law do take into account the tools used to create something, so perhaps these will ultimately come into play. For example, using an employer’s specialized tooling to create a personal project, even after hours, may make that project the employer’s rather than yours.

Until the question of unaltered generative output is resolved, I suggest creators transform it until they’ve made it their own. Frankly, this is better anyway, as it elevates the output from typically banal, mundane, voiceless content into something better. That is the essential human touch.

Furthermore, this very conundrum may be the downfall of those wishing to use AI to replace creatives. If Warner Brothers used AI to generate the next Batman movie, who would own the copyright to that movie?

Unfortunately, this is an incredibly nuanced challenge that is difficult to unravel in a short article, but it comes down to how you use the tool. If you generate something from a prompt alone and do nothing more with the output, it’s questionable where the copyright lies.

While the intricacies of copyright law may be daunting, the guiding principle remains: make the creation your own, weaving together elements from any source until it embodies your unique vision.

Does AI Steal?

This is the heart of many creative people’s discomfort with AI, and the legality of using content to train AI is a nuanced issue.

Assuming the acquisition of training data was lawful (including scraping from public websites), current laws do not prohibit using it to train AI. This is because the AI doesn’t keep copies of the actual content any more than you do when browsing a website—instead, it captures an impression, much like a human does.

Yet, this argument doesn’t sit well with creatives and copyright holders.

The dilemma stems from the abundance of freely accessible content on the internet, which typically lacks clear usage guidelines. Does simply having access to something give somebody the right to use it to train AI?

While downloading publicly available images may not violate copyright law, reproducing them without permission still can. But is it illegal to retain an imperfect impression of something? AI isn’t copying and retaining perfect copies of the images or text it’s trained on; it keeps imprecise, transformative impressions blended into a mathematical model. Thus, it isn’t doing anything contrary to current expectations either. Remember the argument about transformative works and copyright? By the very nature of creating an imperfect abstraction, this data is transformed, not derived.

The other facet of this problem lies in how much content is freely available on the internet. Typically, one uses a license or EULA to explain how the content of a website may be used, but public websites often have no clearly defined license of use. Even if the bottom of a webpage has a license link, that’s a separate file from the actual content, and there’s no way to be sure that the user saw and accepted the license.

In the USA, recent intellectual property law cases have grappled with the issue of publicly available content and its permissible use. The prevailing consensus is that without a EULA directly attached to the content, enforcing limitations on its use is challenging. To strengthen their legal protection, content creators are advised to place their works behind a username/password barrier and provide users with clear usage licenses. Content owners who post their content in this public, laissez-faire manner have little ground to stand on.

The cases currently in court will help refine this for the future. However, it is unlikely that any settlements will be made over past use where the data was acquired legally (including downloading from a public website).

This is likely where we’ll see the most clarifications regarding default expectations for copyrighted works presented for viewing or reading purposes—should this include the viewing and reading to train a model?

In the interim, ethical AI tools respect site-specific flags indicating whether content can be used for AI training, but this is imprecise: there is no common standard, and the flag is part of the website itself, not the actual file containing the content.
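The article doesn’t name a specific flag, but one widely used site-level mechanism is a site’s robots.txt file, which tells crawlers which paths they may fetch. The minimal sketch below uses Python’s standard `urllib.robotparser` against an inline robots.txt; “ExampleAIBot” is a hypothetical crawler name. Note that the answer comes from the site’s rules, not from the image file itself, which is exactly the gap described above.

```python
from urllib.robotparser import RobotFileParser

# A sample robots.txt: block a hypothetical AI-training crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/art/cover.png"
for agent in ("ExampleAIBot", "SomeBrowser"):
    # can_fetch() consults the site-wide rules; the PNG carries no flag of its own.
    print(agent, parser.can_fetch(agent, url))
```

If the same image were copied to another site, these rules would not travel with it.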

One potential solution is embedding metadata into each file indicating the intended use. HTML could utilize a head tag, “Expected-Use,” images could include metadata headers, and similarly for other file types. These tags would specify known values such as ‘human consumption,’ ‘AI training,’ ‘no reproduction,’ and so forth.
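Here is a minimal Python sketch of how a tool might read such a tag, assuming it were expressed as an HTML meta tag named “expected-use” with comma-separated values. The tag name and its values are hypothetical; no such standard exists today.

```python
from html.parser import HTMLParser

class ExpectedUseParser(HTMLParser):
    """Collects values from a hypothetical <meta name="expected-use"> tag."""

    def __init__(self):
        super().__init__()
        self.uses = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)  # attribute values may be None
        if (attributes.get("name") or "").lower() == "expected-use":
            content = attributes.get("content") or ""
            # Normalize each comma-separated value, e.g. "AI Training" -> "ai training".
            self.uses.update(v.strip().lower() for v in content.split(",") if v.strip())

def expected_uses(html):
    parser = ExpectedUseParser()
    parser.feed(html)
    parser.close()
    return parser.uses

page = """<html><head>
<meta name="expected-use" content="human consumption, no reproduction">
</head><body>...</body></html>"""

uses = expected_uses(page)
print(sorted(uses))
print("ai training" in uses)  # a well-behaved training crawler would skip this page
```

Equivalent fields could live in image metadata headers, so the declaration stays attached to the file wherever it is copied.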

Job Security in the Era of AI

Non-creative people have long had a contentious relationship with creative people, with the former often seeking to sideline the latter, and AI is simply another step in this cycle. To those who devalue creative work, Generative AI seems like a holy grail: a way, in their minds, to remove a troublesome part of the process.

This dynamic isn’t new to me. As a veteran of the tech industry, I’ve witnessed firsthand how non-creatives try to outsource creative work at a fraction of the cost. I expect this situation to play out similarly, which is likely why I don’t fear Generative AI much.

My favorite analogy in this outsourcing argument is that the non-creatives in charge of a work effort desire to win the Super Bowl but don’t want to invest in the team to get to the Super Bowl. Instead, they hire local high school JV players, pat themselves on the back for saving money, and then are surprised when the team fails to perform.

Non-creative people believe or hope they can replace creative people with Generative AI, but they overlook a critical factor: AI’s inability to discern quality and understand the emotional connection. This is the realm where creatives shine. Businesses or studios banking on AI to replace creatives will face a harsh reality check as the quality of their output declines, alienating their audiences in the process. Meanwhile, the creatives they let go will have moved on to a new studio, which will then reclaim the lost audience.

Instead, studios that view AI as a tool utilized by creatives rather than a wholesale replacement will achieve great success, and creatives who adapt and integrate Generative AI into their workflow will thrive in this evolving landscape.

Going Forward

People like to know the extent to which AI-generated content contributes to a creation, ultimately because of that emotional connection an audience feels with the creator. Thus, knowing how much Generative AI was used in the creative process is essential for many audience members.

Employing AI as a tool in the editorial process has been commonplace for years across various mediums, such as images and photography. This integration is generally accepted because it enhances and supports the creator’s original vision without generating entirely new works from scratch.

I believe it is important to classify the way Generative AI was used in the creation of a thing. Introducing Generative AI into the editorial process is simply a refinement of those existing tools.

Where people feel uncomfortable is when AI-generated content is presented unethically: either it’s shown as if a human created it, which is disingenuous, or, worse, it’s used like a deepfake, an act of fictional forgery in which something is made to mimic a popular creator’s style and then passed off as authentic. This deceptive practice not only misrepresents the origin of the content but also undermines the integrity of the creative process.

Unfortunately, there’s a lot of ambiguity surrounding the distinction between AI-generated content and AI used in many ways as a tool in the creative process.

Take, for instance, my written work. Atom Bomb Baby was written before ChatGPT existed, and I can assure you that there is no AI-generated content in it. Yet I’m also working on the follow-up, and now that ChatGPT exists, people may ask whether it includes AI-generated content.

As a writer, my pride lies in crafting authentic prose, and I have no intention of incorporating AI-generated content into my writing. However, I find AI invaluable for ideation, brainstorming, aiding in world-building, providing editorial feedback, and even offering suggestions for thematic names or simply being a better thesaurus!

As for the covers? I’ll continue to use AI to help create that art, and any artist collaborating with me on a cover may do so too.

AI-Generated content emerges from AI with little human intervention, while AI-Assisted content involves significant human adjustment during the editorial process. For instance, while Grammarly assists me in editing, its role is distinct from AI-generated content, even when it generates suggested variations on my prose.

I suggest creators start clarifying how they use these tools. AI-Generated content emerges from AI and may have some adjustments or touch-ups. The human may have guided the creation of this content, but in the end, it’s still AI-Generated. AI-Tools cover the other end of the spectrum, where a creator’s sketches or prose are fed into a tool that helps enhance that work. Between these two is AI-Assisted, where AI-Generated content has been used in small or large parts but has been touched and transformed by a human to a significant degree.

Since I use Grammarly in editing, AI has touched ALL of my writing as a tool. I don’t even think it’s necessary to disclose this because so many people are already using AI Tools without knowing it.

Perhaps, alongside a convention such as an “Expected Use” tag, we could attach a disclosure to works stating the percentage of each type of AI use?

Atom Bomb Baby prose: Generative AI: 0%; Assistive AI: 0%

Atom Bomb Baby Cover Art: Generative AI: 20%; Assistive AI: 50%

And the big question: How much Generative AI was used in creating this article? None. AI Tools, however, were used to edit this article.

Expected use: human consumption, ai training.